How you can save money on LLM tokens as a developer with MCP / ChatGPT apps

Really?

I alluded to this in one of my previous posts, but I want to emphasise the point in a separate blog post. If you're building an app that calls an LLM and you package it as an MCP server or a ChatGPT app (which runs on top of MCP), it can save you money on LLM tokens. Furthermore, once the ChatGPT app store goes live, it can also become a powerful distribution channel.

If you are building a web / mobile app

Imagine you're writing an app that helps you learn a foreign language. More specifically, it generates an audio dialogue in a foreign language on a specific topic, tailored to your proficiency. For example, you can ask it:

I'm a beginner learner of Italian. Generate me a short dialogue about going on holidays.

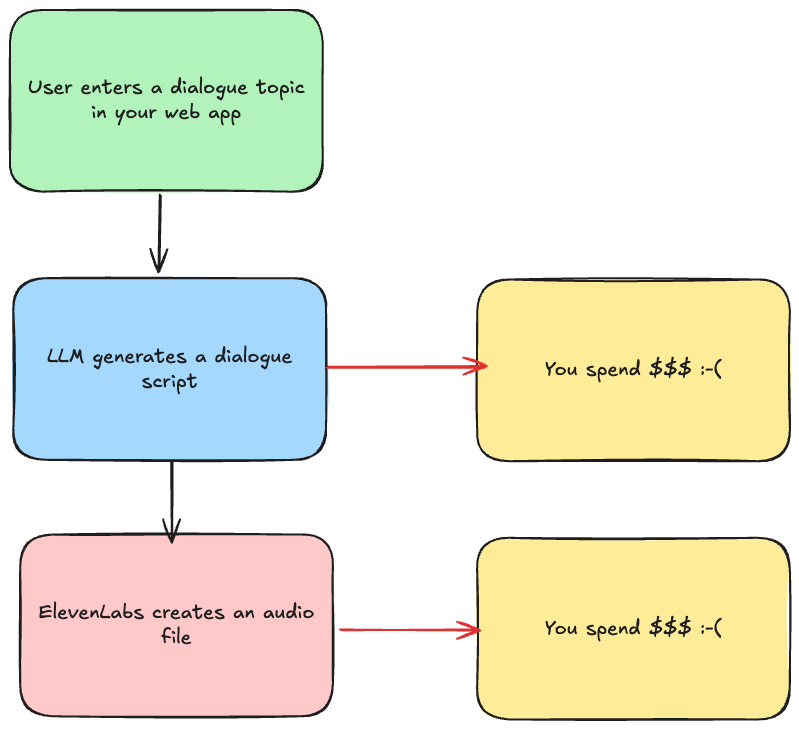

So if you are developing it as a web app or a mobile app would probably have the following sequence:

So you spend money twice:

- First for generating a dialogue script - calling an LLM

- Second for calling a text-to-speech

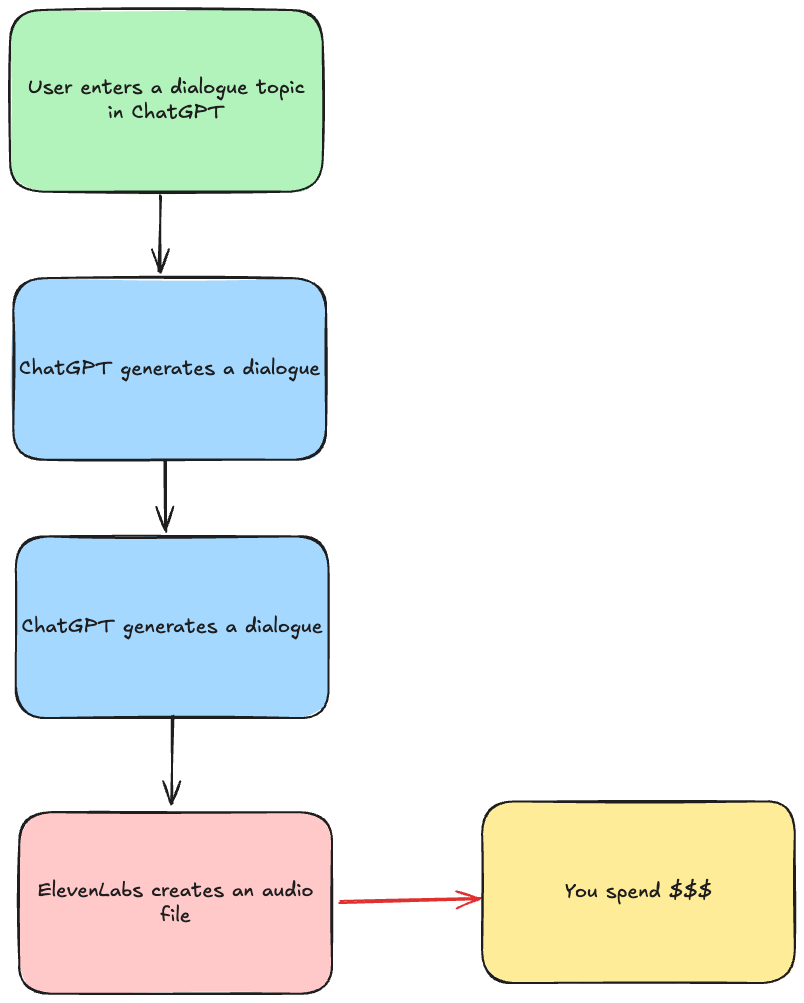
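Here is a minimal sketch of that web/mobile flow, just to make the two paid steps visible. The `LlmClient` and `TtsClient` interfaces, the `generateLessonAudio` function and the fake clients are all hypothetical stand-ins, not any particular provider's API.

```typescript
// Hypothetical client interfaces: stand-ins for whichever LLM and
// text-to-speech providers the web/mobile app actually uses.
interface LlmClient {
  complete(prompt: string): Promise<string>;
}
interface TtsClient {
  synthesize(text: string, voice: string): Promise<Uint8Array>;
}

// Web/mobile flow: the app itself pays for both steps.
async function generateLessonAudio(
  request: string,
  llm: LlmClient,
  tts: TtsClient
): Promise<Uint8Array[]> {
  // Paid call #1: the LLM writes the dialogue script.
  const script = await llm.complete(
    `${request}\nReply with one line per utterance in the form "Name: text".`
  );

  // Paid call(s) #2: text-to-speech turns each line into audio.
  const clips: Uint8Array[] = [];
  for (const line of script.split("\n")) {
    const sep = line.indexOf(":");
    if (sep === -1) continue; // skip lines that aren't "Name: text"
    const speaker = line.slice(0, sep).trim();
    const text = line.slice(sep + 1).trim();
    // Hypothetical: the speaker's name doubles as the voice identifier.
    clips.push(await tts.synthesize(text, speaker));
  }
  return clips;
}

// Fake clients for trying the flow out locally; they count the paid calls.
let llmCalls = 0;
let ttsCalls = 0;
const fakeLlm: LlmClient = {
  async complete() {
    llmCalls++;
    return "Marco: Ciao! Dove vai in vacanza?\nGiulia: Vado al mare.";
  },
};
const fakeTts: TtsClient = {
  async synthesize(text) {
    ttsCalls++;
    return new Uint8Array(text.length); // placeholder "audio"
  },
};
```

Running `generateLessonAudio` with the fakes makes one LLM call plus one TTS call per dialogue line; with real providers, every one of those calls is billed to you.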

Enter MCP / ChatGPT apps

Now suppose you build it as an MCP server or a ChatGPT app (which runs on top of MCP anyway). In that case, you won't have to pay for the LLM tokens that generate the dialogue script.

Why?

Because your MCP server is already being called by an LLM (through a host application such as ChatGPT), and that LLM provides its input.

How exactly? Through the MCP tool input!

Your MCP tools / ChatGPT tools are called by an LLM. If your MCP tool accepts an input argument, you can (in fact, you have to) provide a detailed description of the input you expect. That input is then produced by the LLM, so you don't need to call an LLM inside your MCP server, and that's how you save money on LLM tokens. Here's an example description and input schema for the MCP server that generates audio dialogues for learning foreign languages:

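```typescript
import { z } from "zod";

// Tool metadata and input schema. The host's LLM reads these descriptions
// and produces the `dialogue` argument itself, so the server never calls an LLM.
const generateItalianDialogueTool = {
  title: "Generate Italian dialogue",
  description: "Generates Italian dialogue",
  inputSchema: {
    dialogue: z
      .array(
        z.object({
          personType: z
            .enum(["adult-male", "adult-female"])
            .describe("The type of the person (adult-male or adult-female)"),
          personName: z.string().describe("The name of the person"),
          text: z.string().describe("What that person says in the dialogue"),
        })
      )
      .describe("Dialogue to generate"),
  },
};
```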
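To make the saving concrete, here is a minimal, dependency-free sketch of the server side, assuming the schema above. It spells out the raw MCP `tools/call` JSON-RPC request so you can see that the `arguments` (the whole dialogue) arrive already written by the host's LLM. In a real server the MCP TypeScript SDK parses this message and validates the arguments against the zod schema before invoking your handler; here plain TypeScript types stand in for that. The tool name, the voice IDs and the `planTtsJobs` helper are hypothetical.

```typescript
// Plain TypeScript mirror of the zod input schema.
type PersonType = "adult-male" | "adult-female";
interface DialogueLine {
  personType: PersonType;
  personName: string;
  text: string;
}

// Shape of an MCP `tools/call` request (JSON-RPC 2.0). The host's LLM
// fills in `arguments`; the tool name used below is a made-up example.
interface ToolsCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: { dialogue: DialogueLine[] } };
}

// Hypothetical voice IDs for some TTS provider; replace with real ones.
const VOICES: Record<PersonType, string> = {
  "adult-male": "it-male-1",
  "adult-female": "it-female-1",
};

interface TtsJob {
  voice: string;
  text: string;
}

// The tool handler: no LLM call here, it only maps LLM-written lines to TTS jobs.
function planTtsJobs(request: ToolsCallRequest): TtsJob[] {
  return request.params.arguments.dialogue.map((line) => ({
    voice: VOICES[line.personType],
    text: line.text,
  }));
}

const exampleRequest: ToolsCallRequest = {
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: {
    name: "generate-italian-dialogue",
    arguments: {
      dialogue: [
        { personType: "adult-male", personName: "Marco", text: "Ciao! Dove vai in vacanza?" },
        { personType: "adult-female", personName: "Giulia", text: "Vado al mare in Sicilia." },
      ],
    },
  },
};
```

The only paid step left in the handler would be sending these jobs to a text-to-speech service; the dialogue itself was generated on the host's LLM tokens, not yours.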

To be fair, it's quite a small input schema. If you want to see a larger one, have a look at the source code of the vibe music composing MCP.

By the way, I've written a few more articles / tutorials on building MCPs and ChatGPT apps. You can find them on the home page.

The opinions expressed herein are my own personal opinions and do not represent my employer's view in any way. My personal thoughts tend to change, hence the articles in this blog might not provide an accurate reflection of my present standpoint.

© Mike Borozdin